Parsing Yelp Dataset in PostgreSQL #

The dataset contains information about businesses registered at Yelp.com, users, reviews, tips, and check-ins.

The data is serialized in JSON format. However, not in a form you would expect! Each lines in the JSON files are serialized as a different JSON object. Hence, when the files are loaded using a JSON decoder in Python the decoder fails to read the files accurately. One way to solve the problem is to load the JSON file in memory, decode each line separately using a JSON decoder, append each JSON object into a list in Python, and then parse the data from the list. The code for this would be as following.

import psycopg2

import json

# Load the whole JSON file in memory

f = open('yelp_academic_dataset_tip.json', 'r')

# Initialize an empty list for temporarily holding JSON objects as list

data = []

# Append each line/JSON object in a list

for line in f:

data.append(json.loads(line))

# Open a connection to the database

db_conn = psycopg2.connect("dbname='database_name' host='server_address' user='username' password='password'")

# Iteratively commit the data from the list in database

i=0

while i < len(data):

cur = db_conn.cursor()

cur.execute("insert into Tip (user_id, business_id, tip_text, tip_date, likes, type) values (%s, %s, %s, %s, %s, %s)", (data[i]['user_id'], data[i]['business_id'], data[i]['text'], data[i]['date'], data[i]['likes'], data[i]['type']))

db_conn.commit()

i += 1

# Close database connection

db_conn.close()

# Close JSON file

f.close()

The above approach, however, has a major shortcoming. We are loading the entire JSON file in memory, and then transforming it into a list object in Python before parsing it into a database. This’ll take a large amount of memory for some files in the dataset (as some of these are more than 1GB in size). A clever way to encounter this could be to read the JSON file line-by-line, manipulate it and parse it into the database. This can be achieved with a simple change in the parser script.

# Initialize an empty list for temporarily holding a JSON object

data = []

# Open a JSON file from the dataset and read data line-by-line iteratively

with open('yelp_academic_dataset_review.json') as fileobject:

for line in fileobject:

data = json.loads(line)

The above approach also doesn’t require to close the file.

The parser code can be found on GitHub.

Setting Up PostgreSQL and Python on Ubuntu 16.04LTS #

I have setup PostgreSQL and Python modules for parsing the dataset in both local and remote machines. But in both cases, the base system was the same.

OS: Ubuntu 16.04LTS

Database: PostgreSQL 9.5

Language: Python 3.5

Database management tool: pgAdmin 3 or 4

Before starting, I am assuming you have installed Ubuntu as a virtual machine or as a standalone local machine or as a remote server. Once you’re done, you can move on to install PostgeSQL on Ubuntu.

First, refresh the apt software package list.

$ sudo apt-get update

Now we can use install the PostgreSQL package, along with the -contrib package. Since we are installing from a pre-packaged distribution of PostgreSQl, the -contrib package is needed to install additional modules of PostgeSQL.

$ sudo apt-get install postgresql postgresql-contrib

If the installation process is successful, it will create a user account named postgres in the system. We can use the postgres account to test the installation. For that, we need to change to the user postgres from terminal.

$ sudo su postgres

Now use the following command to access PostgreSQL from the terminal.

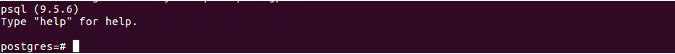

$ psql

You’ll see something like this on your terminal:

We can use the account postgres to access and work on the database. However, as a rule of thumb, it is better to create a superuser and access the database using that account. To create a superuser account, we need to get out of Postgres front-end and get back to the user account postgres. use the following command to get out of Postgres terminal front-end.

# \q

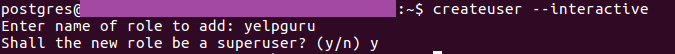

Now that we are in postgres system account, use the following command to create a superuser in interactive mode.

We have created a superuser account yelpguru. Now we can setup a password for the user from the Postgres terminal front-end.

# ALTER USER yelpguru PASSWORD 'your_password';

# \q

Switch to your system user account from the postgres account.

$ exit

If we want to use access this database remotely, we need to setup Postgres for remote access using pgAdmin or any other database management tool. We need to change two files to achieve this - postgresql.conf and pg_hba.conf. We’ll need to declare the allowed IP address range in these files. For this case, I’ll allow all IP address to access Postgres remotely.

First, open the file postgresql.conf using the following command.

$sudo nano /etc/postgresql/9.5/main/postgresql.conf

Look for the line #listen_addresses = 'localhost' and change it to listen_addresses = '*'.

Save the changes. Next, open the file pg_hba.conf.

$ sudo nano /etc/postgresql/9.5/main/pg_hba.conf

Add the line host all all 0.0.0.0/0 md5 in the appropriate block.

Save the changes and exit. Restart Postgres from terminal for the changes to take effect.

$ sudo service postgresql restart

Now install pgAdmin on your system. If you’re using Postgres locally, you can install it from the terminal.

$ sudo apt-get install pgadmin3

You can now login to Postgres through pgAdmin using the superuser user credentials.

The next step is to set up Python modules for parsing the dataset. I am using Python 3 for the project, along with psycopg2 module to connect to Postgres. but before we can install psycopg2 on Python 3, we need to install pip3 and libpq-dev.

$ sudo apt-get install python3-pip

$ sudo apt-get install libpq-dev

Now we can istall psycopg2 on Python3.

$ sudo pip3 install psycopg2

Access python3 from the terminal, and import psycopg2 to check if it has been installed properly. I have ran into problems related to permissions when installing psycopg2 on a local machine using sudo. If you run into such problems, switch to root using sudo su and run pip3 install psycopg2.

If you have been able to setup all these tools, then you’re ready to parse the data on a Postgres database using the parser I shared on GitHub.